At some point, you will likely need to release changes that contain more than just schema changes. You may need to update data in a table, remove data that could cause a problem, or insert some data to support a code release. To support these changes, SSDT provides support for Pre-Deploy and Post-Deploy scripts.

These scripts are not created by default in a new SSDT project. To organize your scripts, you may want to create a folder structure similar to the following.

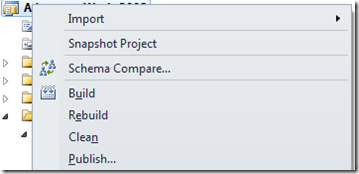

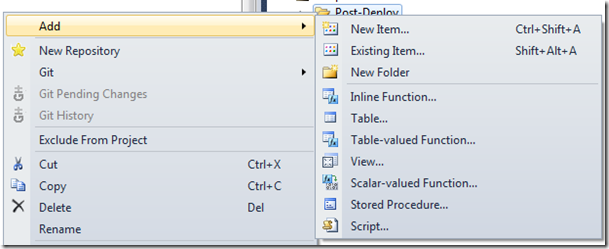

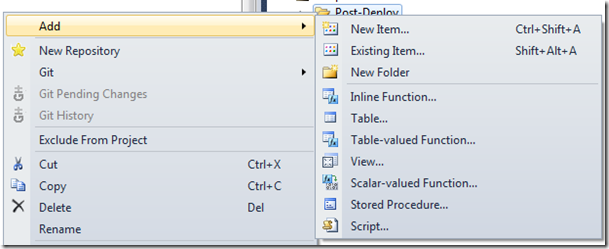

If you right-click the Post or Pre Deploy folders, you can choose to add a Script item.

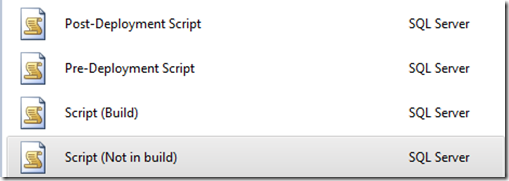

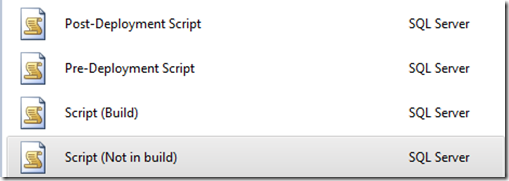

You will then get a choice of which type of script to include. Choose Pre or Post deployment, as appropriate, and name the file accordingly.

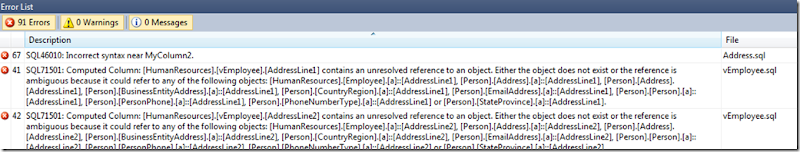

You can only have one Pre-Deployment script and one Post-Deployment script per project as part of the Build! All included scripts must be set to “Not in build” or you will likely have errors.

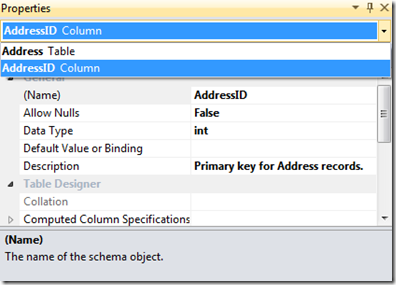

If you look at the properties of these scripts, you will see that the Build Action is set to PreDeploy or PostDeploy. Opening the Post-Deploy script will show this default text.

/*

Post-Deployment Script Template

-------------------------------------------------------------------------------------- This file contains SQL statements that will be appended to the build script. Use SQLCMD syntax to include a file in the post-deployment script. Example: :r .\myfile.sql Use SQLCMD syntax to reference a variable in the post-deployment script. Example: :setvar TableName MyTable SELECT * FROM [$(TableName)]

--------------------------------------------------------------------------------------

*/

Using Pre and Post Deploy Scripts

These files are interpreted as SQLCMD scripts by SSDT. You can use most valid SQLCMD syntax within the script file to include other scripts and set variables. At the time of this writing, you cannot use the

:ON ERROR IGNORE command within a script. This will hopefully be addressed in a future release of SSDT.

Pre-Deploy scripts will always run before the schema changes are run. If you alter the database in a way that changes the schema, you may encounter errors with the release. E.g., if you make schema changes to the Person.Person table, but drop that table in a Pre-Deploy script, your publish action will likely fail.

Likewise, Post-Deploy scripts will always run after the publish action. This makes them a great place to insert new data, make small adjustments to the system, or perhaps implement custom security and permissions. The caveat to using post-deploy scripts to make changes is that the changes need to be repeatable. If you write an insert statement for a new lookup value, that same insert will run next time unless you check for the existing value first.

To create a new script to run in pre or post deploy:

- Right-click the appropriate folder (Pre-Deploy, Post-Deploy, other)

- Choose to add a "script" and select the "Script (Not in build)" option

- Give it a name, preferably one without spaces as it will make it easier to run the pre or post deploy script.

- Add your code changes. You may want to give it some descriptive comments and sometimes a Print statement can be helpful if you want to see progress as you run a deploy script manually.

- Make sure that you can re-run this script without causing errors! Check for data that may already exist, use outer joins, wrap your code in Try/Catch - whatever you need to do to make sure that you can have this run again if necessary.

- After saving the script, edit your pre or post-deploy script and add a new line in a non-commented area something like:

Run a script called "MyScript"

- This assumes that your script is in the same folder as your pre or post-deploy scripts. Adjust the relative paths as needed for the script you created.

- Save your deploy script.

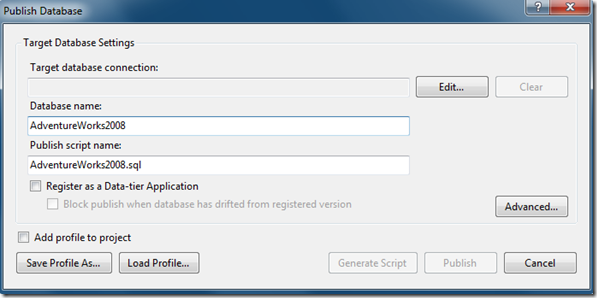

The next time you publish your project, it will pick up this script and include it in your change set. If you choose to create a script on publish, you can see the full text of your scripts included in the pre or post-deploy section of your change script.

Cleanup of Pre and Post Deploy Scripts

There are several options for cleanup, but one of the best suggestions I've seen has been to generate project snapshots and then remove the script references from your pre/post deploy scripts and the script files themselves from the project. They will still be saved in the snapshot, but will not be in your project anymore. You may be able to manage this well through your Version Control System, but snapshots do have some advantages.

Pros:

- Your project will remain somewhat clean.

- Script files will be saved with their appropriate snapshot, ideally tied to a particular release.

- Less concern about whether a script is replayed because it's removed.

- Good for scripts that have a larger impact and should only be run one-time.

Cons:

- It's a manual process and requires some attention to detail.

- You need to look in the snapshots to see what scripts were used.

- It may require that you have a more formalized release process.

- You may need to publish several snapshots to bring the database up to a current version.